Architecture

The ./HAVOC platform is built on top of several services that are available in Amazon Web Services. This section describes the underlying services that run each of the ./HAVOC platform components.

Deployment Resources

A ./HAVOC deployment is built on top of five primary AWS services:

- API Gateway

- Lambda

- DynamoDB

- S3

- Elastic Container Service

Interactions with the ./HAVOC API come in through API Gateway and, depending on the endpoint requested, are directed to one of several Lambda functions that carry out the requested operation.

API Gateway REST API

When a ./HAVOC deployment is created, an AWS API Gateway REST API is created with a name based on the provided deployment name.

Stage

A stage named "havoc" is associated with this API deployment.

Domain Name & Base Path Mapping (Conditional)

If domain naming is enabled, the API Gateway will have a domain name generated from the deployment name and the supplied domain name. The domain name uses a regional endpoint configuration. A corresponding base path mapping is also created to map the REST API to the specified domain name under the "havoc" stage.

Authorizer

A custom authorizer named after the deployment is used for request authorization. It utilizes a Lambda function to authorize requests and requires three headers for the authorization:

- x-api-key

- x-signature

- x-sig-date

Resources & Methods

The following API resources are created:

- /manage

- /remote-task

- /task-control

- /playbook-operator-control

- /trigger-executor

- /workspace-access-get

- /workspace-access-put

For each of the resources above, there's an associated POST method that uses the custom authorizer for authentication. Each POST method expects request headers x-api-key, x-signature, and x-sig-date.
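As a minimal sketch of the header requirement described above, the following Python helper reports which of the three authorization headers are missing from a request. The function name missing_auth_headers is hypothetical and is not part of ./HAVOC. The sketch only checks for presence; it makes no assumption about how the signature itself is computed or verified.

```python
# Header names taken from the authorizer description above.
REQUIRED_AUTH_HEADERS = ("x-api-key", "x-signature", "x-sig-date")


def missing_auth_headers(headers):
    """Return the required header names that are absent from `headers`.

    HTTP header names are case-insensitive, so the comparison is done
    on lowercased names. `missing_auth_headers` is a hypothetical helper.
    """
    present = {name.lower() for name in headers}
    return [name for name in REQUIRED_AUTH_HEADERS if name not in present]
```

A client could run a check like this before sending a POST request, so that an incomplete request fails locally instead of being rejected by the authorizer.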

Lambda Integrations

Each of the API resources (endpoints) has an associated Lambda function integration. Each integration uses the POST method and the AWS_PROXY integration type. This means that API Gateway passes the entire client request (headers, path, and body) through to the Lambda function without transformation.
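To illustrate what the AWS_PROXY integration type means for a function, here is a minimal sketch of a Lambda handler in Python. With a proxy integration, API Gateway delivers the request as an event dictionary (with keys such as "path", "headers", "body", and "isBase64Encoded") and expects a dictionary containing "statusCode" and a string "body" in return. The handler below is not one of the ./HAVOC functions, and treating the request body as JSON is an assumption made for the example.

```python
import base64
import json


def _response(status, body):
    """Build a response in the shape API Gateway expects from a proxy integration."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handler(event, context):
    """Illustrative handler only; not an actual ./HAVOC Lambda function."""
    raw_body = event.get("body") or "{}"
    # API Gateway sets isBase64Encoded when the body was base64-encoded in transit.
    if event.get("isBase64Encoded"):
        raw_body = base64.b64decode(raw_body).decode("utf-8")
    try:
        # Assumption: the request body is a JSON document.
        payload = json.loads(raw_body)
    except json.JSONDecodeError:
        return _response(400, {"error": "request body is not valid JSON"})
    return _response(200, {"path": event.get("path"), "received": payload})
```

Because the request arrives untransformed, the function itself is responsible for parsing the body and for building the complete HTTP response.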

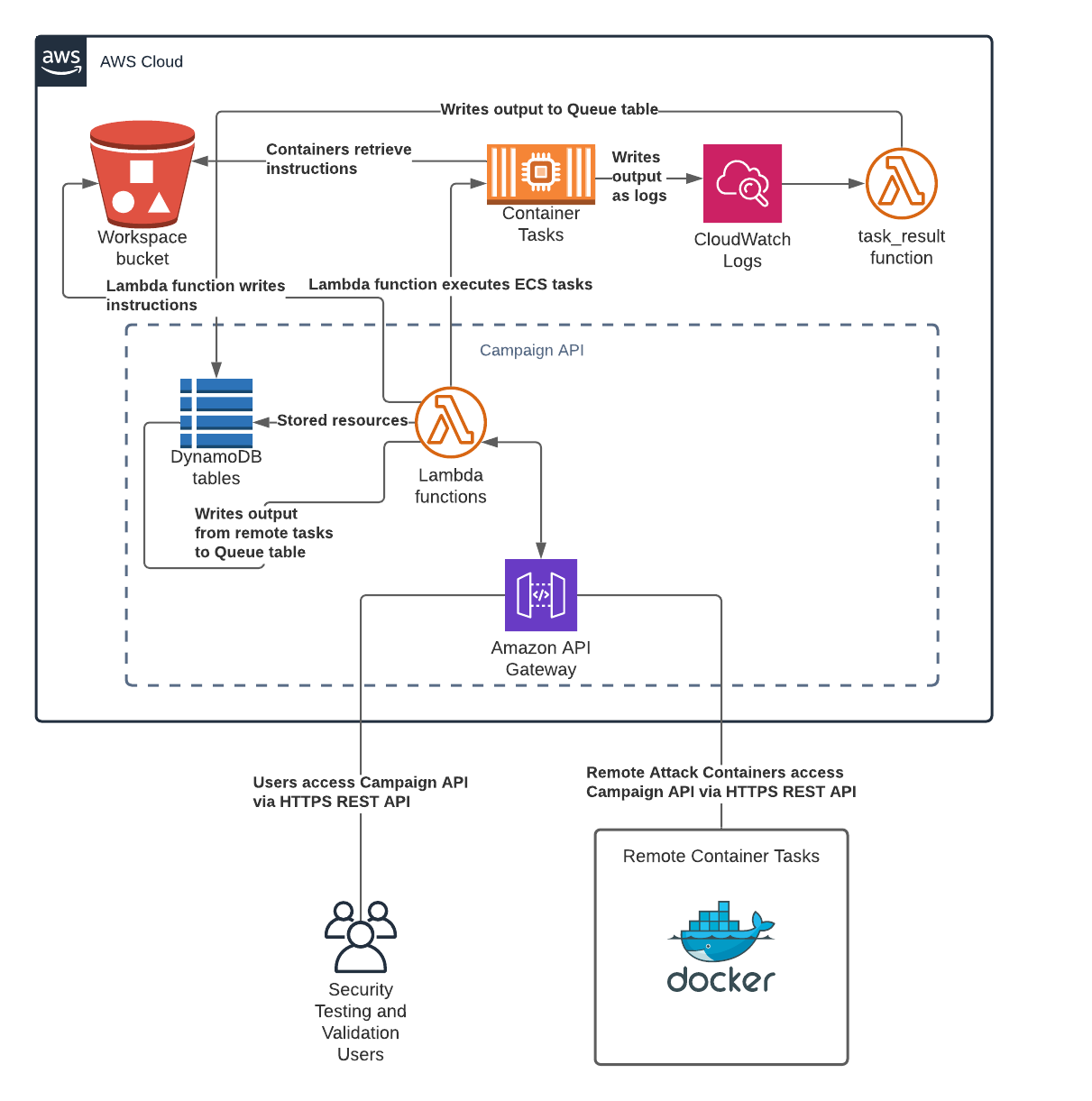

Dynamically generated resources such as users, task types, tasks, and portgroups are stored in DynamoDB tables. Users send instructions to container tasks via the task-control API endpoint. Container tasks that are launched via the task-control endpoint run in ECS (see the Container Tasks section below for more details). When a user sends instructions to a container task via the task-control endpoint, the API writes the instructions as files to a workspace bucket in S3. Container tasks running in ECS retrieve their respective instructions directly from the workspace bucket in S3.

When a container task in ECS has results to deliver, it writes its output to a CloudWatch Logs log group that triggers a Lambda function called task_result. When the task_result Lambda function receives new logs from the log group, it cleans up the log entries and writes them to a DynamoDB table called "queue" that is used as the results queue. Users can query the API to retrieve the output generated by the container tasks from the queue.
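A Lambda function that is triggered by a CloudWatch Logs log group, as task_result is, receives the log data base64-encoded and gzip-compressed under event["awslogs"]["data"]. The sketch below shows only that decoding step. The function name decode_log_messages is hypothetical, and the cleanup and DynamoDB write performed by the real task_result function are not reproduced here.

```python
import base64
import gzip
import json


def decode_log_messages(event):
    """Extract log messages from a CloudWatch Logs subscription event.

    `decode_log_messages` is a hypothetical helper, not ./HAVOC code.
    """
    # The payload is base64-encoded, gzip-compressed JSON.
    compressed = base64.b64decode(event["awslogs"]["data"])
    payload = json.loads(gzip.decompress(compressed))
    # Each entry in logEvents carries an id, a timestamp, and the message text.
    return [entry["message"] for entry in payload.get("logEvents", [])]
```

Each returned message corresponds to one line of output written by a container task, which a function like task_result could then clean up and store in the results queue.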

Remote container tasks are not assumed to have direct access to S3 or CloudWatch Logs. Instead of retrieving instructions from the workspace S3 bucket or writing output to CloudWatch Logs, remote container tasks can register themselves, retrieve their instructions, and post their results via the remote-task endpoint of the API. Retrieving instructions via the remote-task endpoint happens by way of the remote_task Lambda function, which pulls the task's instructions from the workspace bucket in S3 and delivers them to the container task in an HTTP response. When the container posts results to the remote-task endpoint, the remote_task Lambda function performs the same operations as the task_result Lambda function described above.

Container Tasks

Container tasks that are launched via the API are executed as tasks in an ECS cluster. When a task is executed, ECS looks to the task definition for container image information and task execution details. ECS then retrieves the latest version of the container image from the ./HAVOC public container registry and executes it as specified in the task definition. Container tasks will obtain a public IP address and have full outbound Internet access. Inbound Internet access can be controlled via "portgroups," which are really just AWS Security Groups that can be managed through the ./HAVOC API.

Go to the Launching Container Tasks page for more details on using container tasks.

Container tasks can also be run remotely on any system that supports Docker containers, but remote container tasks cannot be launched through the API. Instead, they must be launched using the docker run command or via docker-compose. When the container starts up, it registers itself with the API as mentioned above.

Go to the Launching Remote Containers Tasks page for more details on using remote container tasks.

Workspaces

Workspaces are not a significant component of the overall architecture, but they deserve a special mention because all tasks have access to a "shared" directory within the workspace bucket that can be used to share files between container tasks. Remote container tasks can send files to and retrieve files from the shared workspace via the remote-task endpoint.

Go to the Interacting with Container Tasks section of the Usage Through CLI Console page or the Interacting with Container Tasks section of the Usage Through SDK page for more details on sharing files through workspaces.

Architecture Diagram

This is a visual representation of the overall architecture:
